Daniel Bedoya will defend his thesis, Capturing musical prosody through interactive audiovisual annotations, at the Institut de Recherche et Coordination Acoustique / Musique (IRCAM, 1 place Igor-Stravinsky, 75004 Paris, France) at 10am Paris time (CEST) on Wednesday, 18 October 2023.

STMS Events announcement: https://www.stms-lab.fr/article-event-list

YouTube link for remote viewing of the defence: https://www.youtube.com/live/Agq2iZw1ZxY

Daniel is a doctoral candidate at Sorbonne University, funded by an EDITE doctoral contract. His PhD is part of the ERC project COSMOS (Computational Shaping and Modeling of Musical Structures), carried out within the Music Representations Team of the Sciences and Technologies of Music and Sound (STMS) Laboratory (IRCAM, Sorbonne University, CNRS, Ministère de la Culture). Daniel currently holds an attaché temporaire d’enseignement et de recherche (ATER, temporary teaching and research associate) contract at the Structural Mechanics and Coupled Systems Laboratory (LMSSC) of the Conservatoire national des arts et métiers (CNAM) in Paris.

The defence will take place at IRCAM, in English, before a jury composed of:

- Roberto Bresin, KTH Royal Institute of Technology (Sweden), Rapporteur

- Pierre Couprie, University of Paris-Saclay, Rapporteur

- Jean-Julien Aucouturier, FEMTO-ST, Examiner

- Louis Bigo, Université de Lille, Examiner

- Muki Haklay, University College London, United Kingdom, Examiner

- Anja Volk, Utrecht University, Netherlands, Examiner

- Carlos Agón, Sorbonne University, Director

- Elaine Chew, King’s College London, United Kingdom, Co-Director

Capturing musical prosody through interactive audiovisual annotations

Abstract: Participatory science (PS) projects have stimulated research in several disciplines in recent years. Citizen scientists contribute to this research by performing cognitive tasks, which in turn fosters learning, innovation and inclusion. Although crowdsourcing has been used to collect structural annotations in music, PS remains underused for studying musical expressivity.

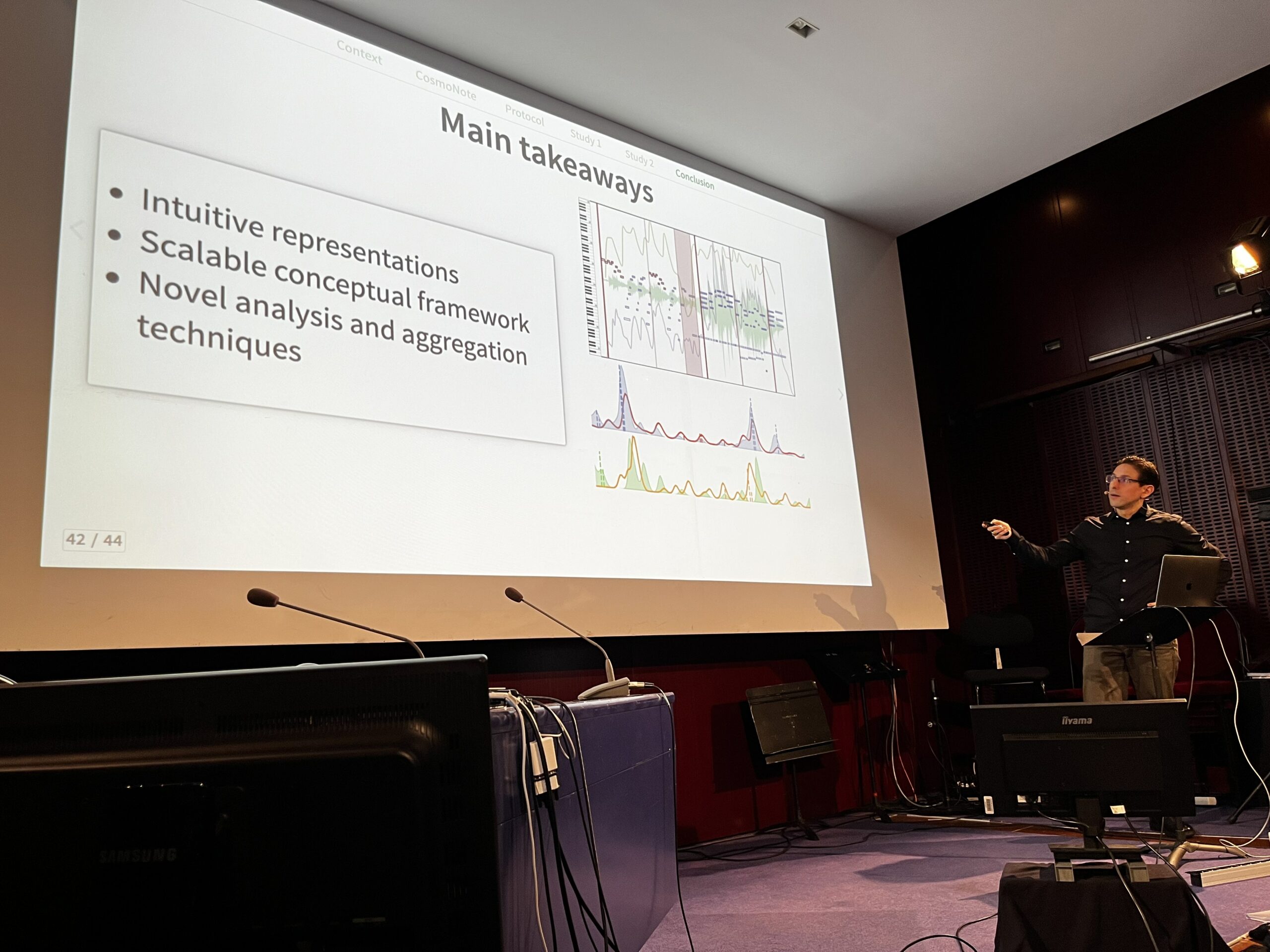

We introduce a new annotation protocol to capture musical prosody, that is, the acoustic variations that performers introduce to make music expressive. Our top-down, human-centred method places the listener at the centre of annotating the prosodic features of music. We focus on segmentation and prominence, which convey structure and affect. This protocol provides a PS framework and an experimental approach for conducting systematic and scalable studies.

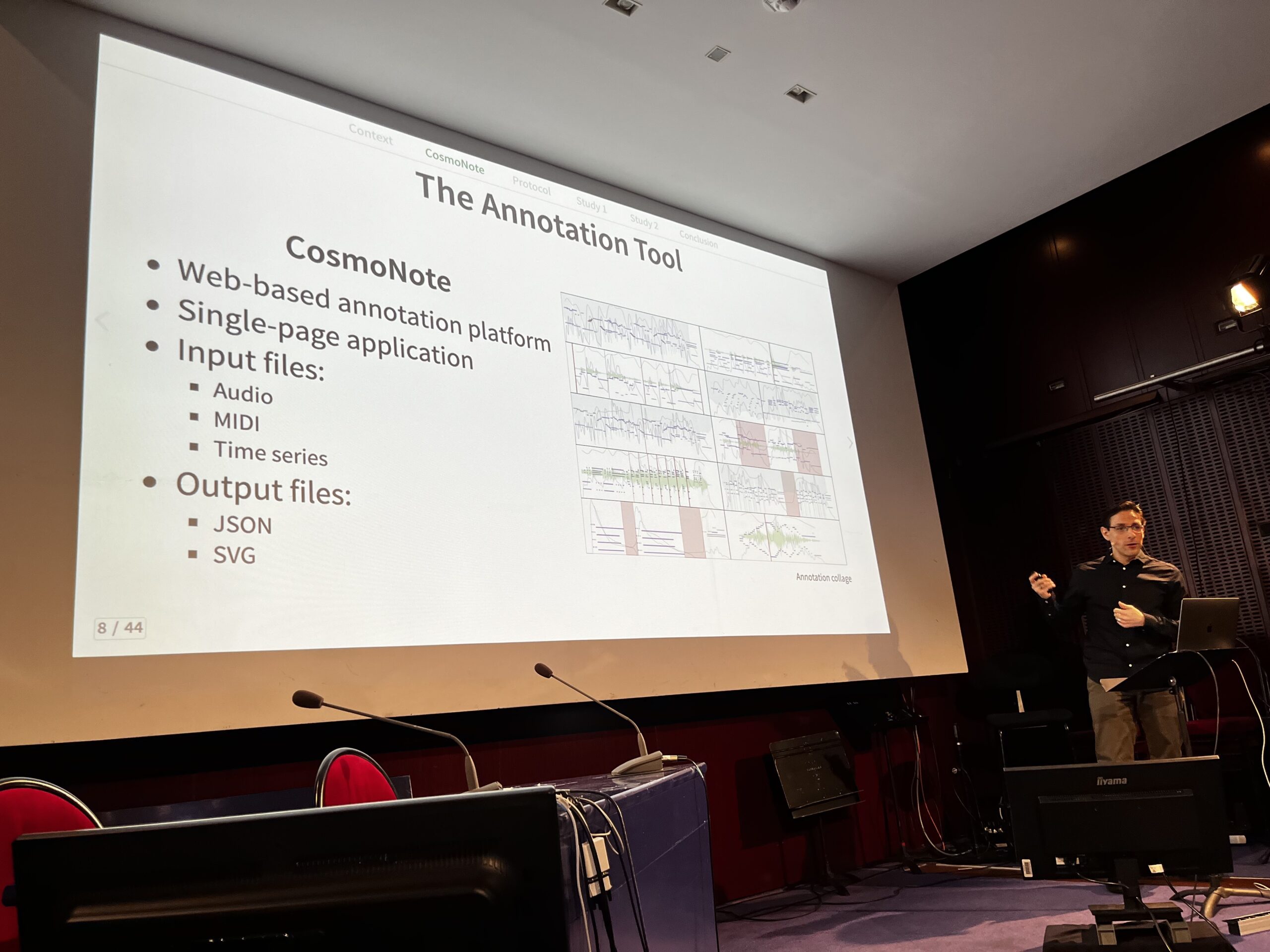

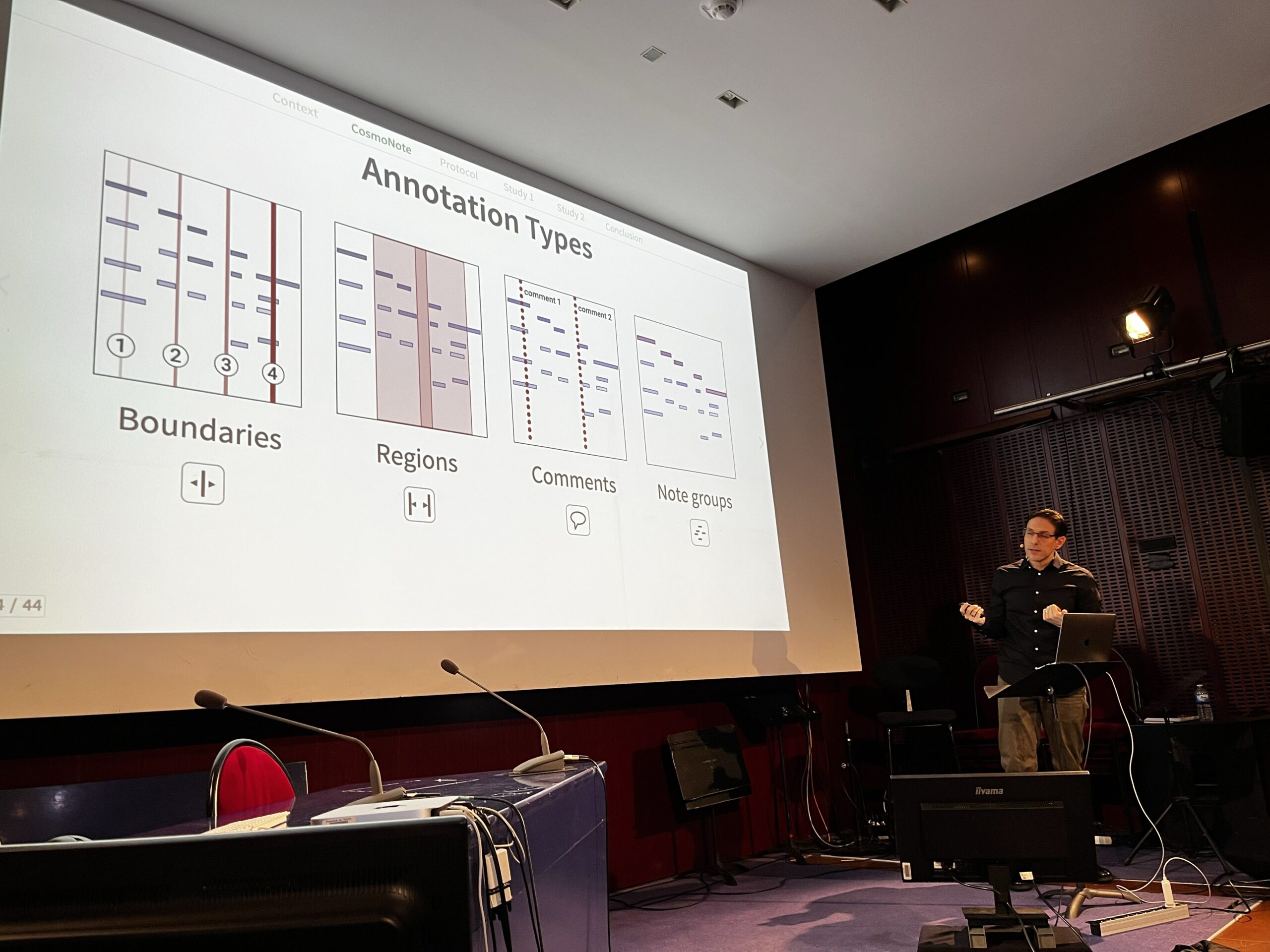

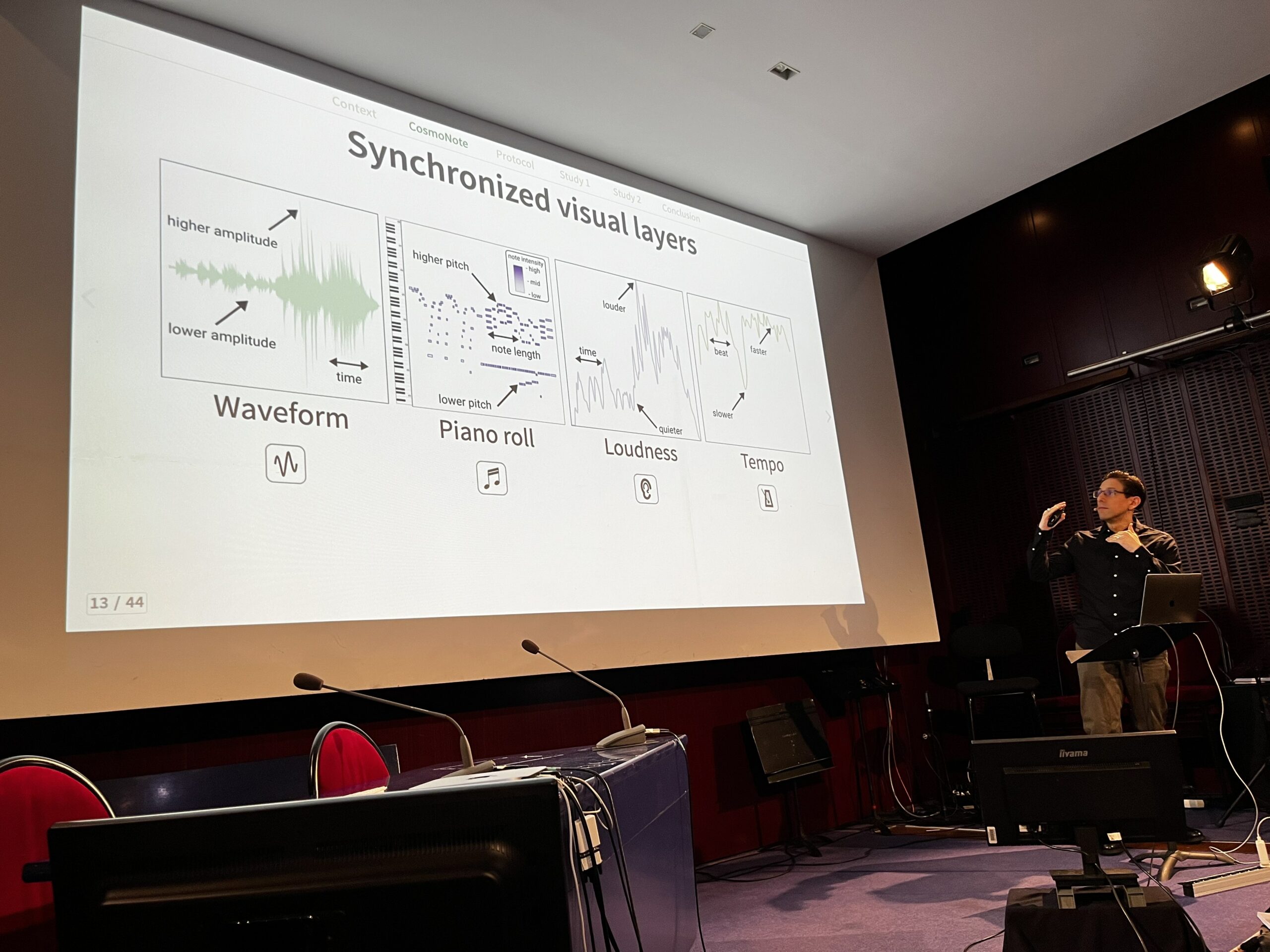

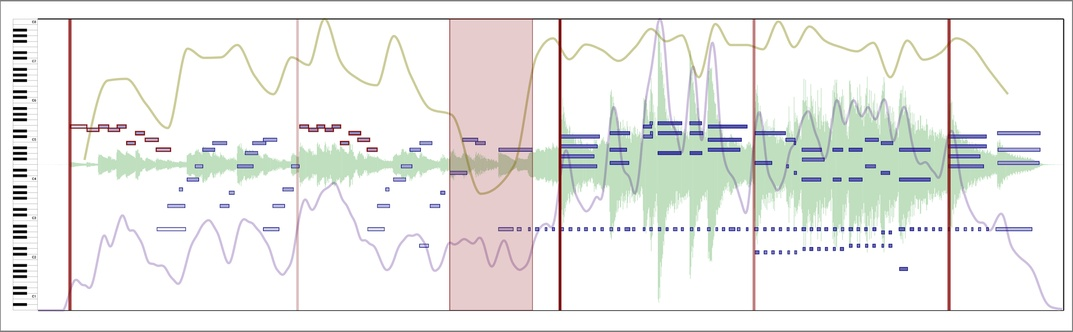

We implement this annotation protocol in CosmoNote, a customisable web-based software platform designed to facilitate the annotation of expressive musical structures. CosmoNote lets users interact with visual layers, including the waveform, the recorded notes, extracted audio attributes and score features. Users can place boundaries at different levels and add regions, comments and groups of notes.

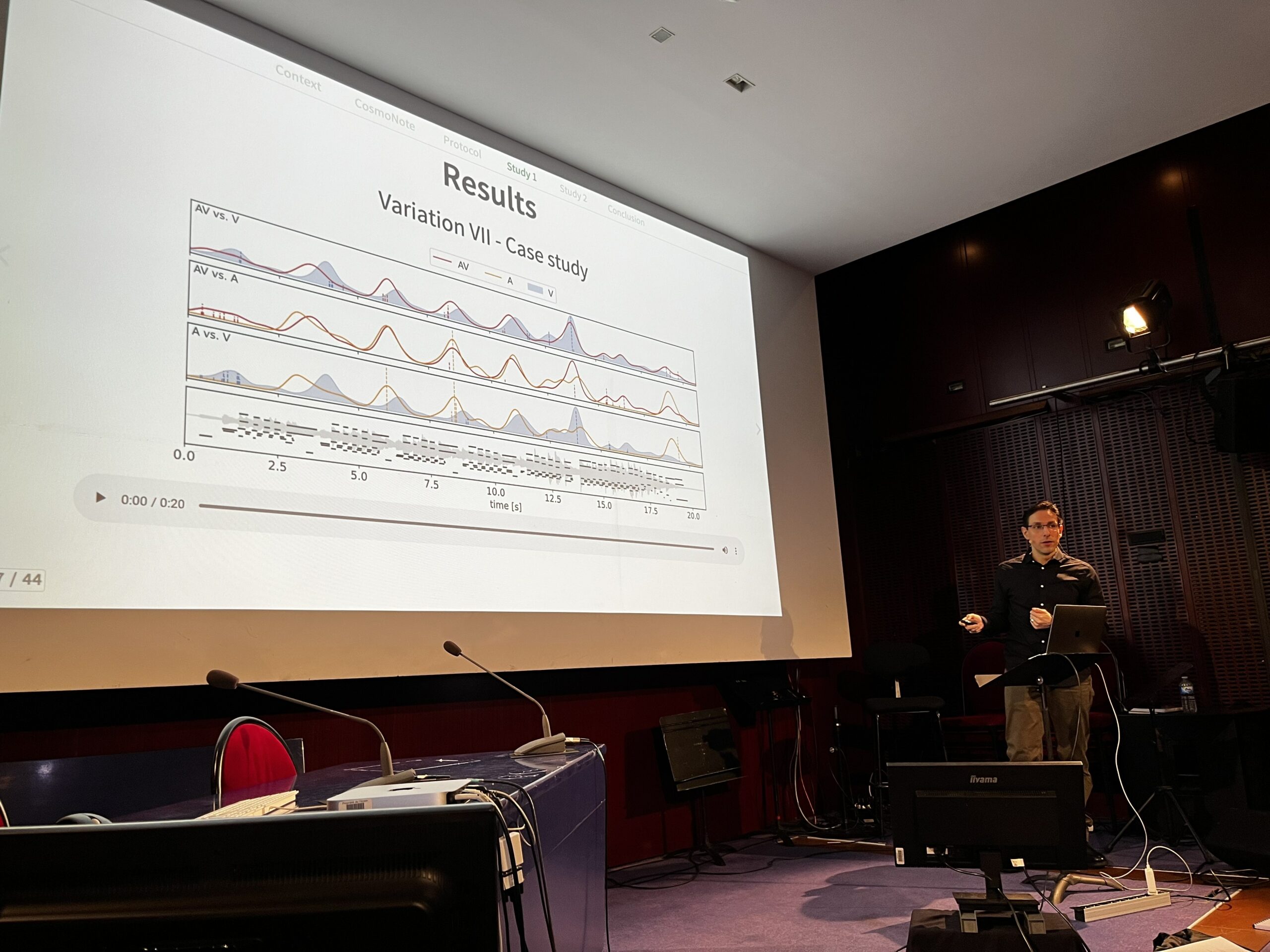

We have conducted two studies to improve the protocol and the platform. The first examines the impact of simultaneous auditory and visual stimuli on segmentation boundaries. We compare the boundary distributions derived from intermodal (auditory and visual) and unimodal (auditory or visual) information. The distances between the unimodal-visual and intermodal distributions are smaller than those between the unimodal-auditory and intermodal distributions. We show that adding visuals accentuates key information and provides cognitive scaffolding that helps listeners clearly mark prosodic boundaries, although the visuals may also draw attention away from specific structures. Conversely, without audio the annotation task becomes difficult and subtle cues are masked. Even when they are exaggerated or inaccurate, visual cues are essential for guiding boundary annotations in performed music, improving overall results.

The second study uses all types of CosmoNote annotations and analyses how participants annotate musical prosody, with minimal or detailed instructions, in a free annotation setting. We compare the quality of annotations between musicians and non-musicians. We evaluate the PS component in an ecological setting, where participants work completely autonomously on a task that demands time, attention and patience. We present three methods for analysing and aggregating the data, based on annotation labels, categories and common properties. The results show convergence in the types of annotations and descriptions used to mark recurrent musical elements, across all experimental conditions and levels of musical ability. We propose strategies for improving the protocol, data aggregation and analysis in large-scale applications.

This thesis enriches the representation and understanding of structures in performed music by introducing an annotation protocol and platform, adaptable experiments, and aggregation and analysis methods. We highlight the importance of the trade-off between collecting data that are simpler to analyse and richer content that captures complex musical thinking. Our protocol can be generalised to studies of performance decisions to further the understanding of expressive choices in musical performance.