The International Conference on AI and Musical Creativity (AIMC) grew out of the merger of the Musical Metacreation (MuMe) and the Computer Simulation of Music Creativity (CSMC) conferences in 2020.

The 2023 AIMC was hosted by the Experimental Music Technologies Lab at the University of Sussex and the Intelligent Instruments Lab, Thor Magnusson's ERC-funded project on AI in musical instruments at the Iceland University of the Arts in Reykjavik. The conference took place at the University of Sussex in Brighton from 30 August to 1 September 2023.

The keynote speakers were CJ Carr, co-creator of Dadabots, and Elaine Chew. Both also joined the Industry Panel moderated by Ollie Bown.

0:01:17 Introduction by Thor

0:03:04 Preface

0:06:43 Lecture begins

0:08:40 Background

0:16:32 Spiral Array Model

0:21:35 Capturing Performance

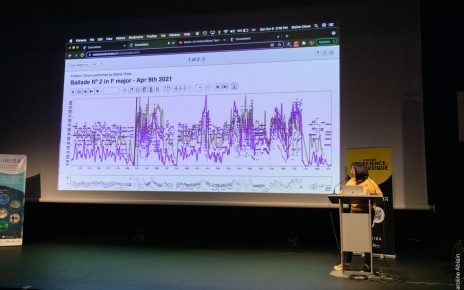

0:36:37 Marking Performed Structures

0:42:36 Music Composition / Generation

0:44:25 Tension and Music Generation

0:52:13 Heartbeat Music

1:01:42 Mouse Music

Keynote by Elaine Chew: Performer-Centered AI and Creativity

“When a pianist sits down and does a virtuoso performance he is in a technical sense transmitting more information to a machine than any other human activity involving machinery allows” ~ Robert Moog

Performance is the primary medium through which music is communicated, whether on acoustic or electronic instruments. It is the conduit for the flow of artistic and intellectual ideas, and for the shaping of emotional experiences. But capturing the formidable creativity underlying compelling performances presents many challenges, not least the fleeting nature of performance and the lack of a written tradition that would allow it to be represented and held still for close study. Through a series of vignettes, I shall present a biographical narrative of my search for ways to capture and represent the ineffable know-how of performance from the vantage point of a pianist. The journey spans a range of efforts to name and model the things performers do: the choreography of tension based on harmony and time; stretching the limits of notational technology to represent performed timings (also applied to arrhythmic sequences); and tapping into the volunteer thinking of citizen scientists to detect the structures performers create and to design or compose a performance. Most recently, the research has turned to healthcare, where performed structures serve as a means to modulate cardiovascular autonomic response.

Industry Panel

Ollie Bown (University of New South Wales), moderator

Ryan Groves (Infinite Album, AI Song Contest)

CJ Carr (Dadabots)

Samim Winiger (Okio.ai)

Elaine Chew (King’s College London)