We are pleased to announce a talk by Gershon Dublon at 10h00 CEST on Thursday, 1 July 2021, in the Shannon Room, IRCAM, 1 place Igor-Stravinsky, 75004 Paris, France. Gershon is in Paris for a three-month artistic research residency at Clément Duhart’s transdisciplinary research lab at the Pôle Léonard de Vinci. See the video (also at medias.ircam.fr/embed/media/x981600) and talk details below.

Empowering Perception: A Digital Planetary Sensorium

Abstract: Advances in mixed reality and discoveries in perception science offer exciting possibilities for matching human perceptual strengths with vast distributed sensing, AI, and memory resources. In this sphere, audio-based techniques stand to lead the way, both because of recent advances in non-occluding, head-tracking headphones and because of increasing evidence that ambient and peripheral stimuli influence human mood, attention, and cognition.

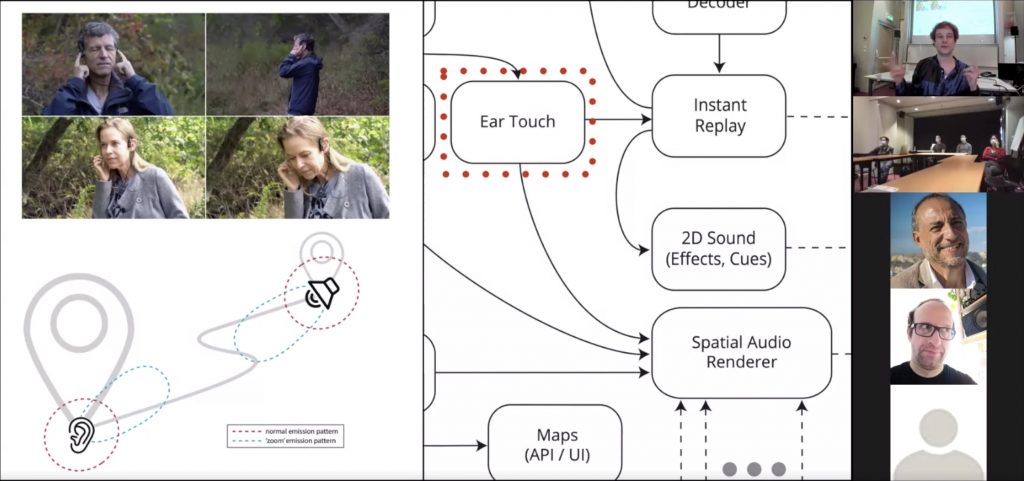

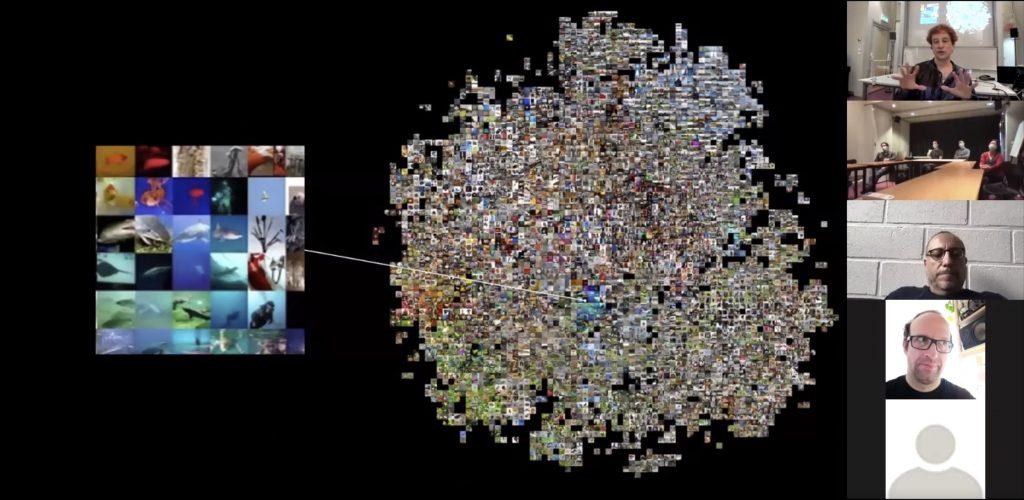

In this talk, I present my doctoral research, which moved this vision from the lab into a real-world testbed. It focuses on a large-scale environmental sensing system, a massive environmental audio dataset, and the audio AR wearable devices I developed. In our 577-acre wetlands testbed, users can superimpose the past onto the present, observe distant effects of their actions, and explore events from different perspectives. An AI system perceiving sensor data and audio alongside human users can subtly direct their attention by weighting stimuli, without explicitly interrupting them. In studies, I found that certain digitally produced illusions, caused by the melding of digital sense media with the physical world, resolved into qualitatively new perceptual experiences. Using HearThere, a bone-conduction AR system that many users could not distinguish from their natural hearing, participants made intuitive discoveries ranging from self-evident seasonal variations to intricate wildlife migration patterns.

Finally, I will touch on ongoing research here in Paris. We aim to create a human-in-the-loop feedback system that combines sensing with concatenative synthesis over a large corpus of field recordings, applied to sleep staging and to meditation and mind-wandering. In its artistic form, this work was recently presented as a live performance system at NIME 2021, and it is due to appear at Ars Electronica and other venues later this year. Through this talk and the conversation that follows, I hope to connect with a broad array of IRCAM’s projects and aims: auditory perception, concatenative synthesis, immersive and spatial audio, ability and access, and the broad opportunities at the intersection of audio and human-centered AI.

Biosketch: Gershon Dublon is a researcher and artist-engineer working with sensing, machine learning, and embodied interaction to critically reinvent presence in a world of ubiquitous computing and distributed sensing. His work proposes methods for comprehending massive, longitudinal sensor data and AI systems in the service of a sensory connection to self and environment. Dublon has published in Presence (MIT Press), Scientific American, IEEE Sensors, New Interfaces for Musical Expression, Body Sensor Networks, the International Conference on Machine Learning, and elsewhere, and recently contributed a chapter to the MIT Press book Swamps and the New Imagination. His projects and studio productions have been exhibited at venues and festivals including Boston’s Museum of Fine Arts, Mexico’s National Center for the Arts, Ars Electronica, and the Sundance Film Festival. In 2018, Dublon co-founded slow immediate, a creative engineering studio incubated by the New Museum’s NEW INC program and ONX Studio. He is also a board member of Living Observatory, a Boston-based non-profit organization focused on the future of wetland restoration. Dublon received an SM and a PhD from the MIT Media Lab, where his research was supervised by Prof. Joe Paradiso, and a BSEE from Yale.