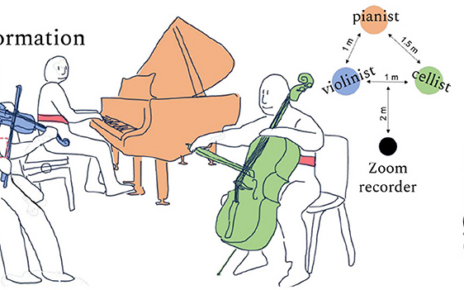

𝐒𝐜𝐢𝐞𝐧𝐜𝐞 𝐨𝐟 𝐦𝐮𝐬𝐢𝐜-𝐛𝐚𝐬𝐞𝐝 𝐜𝐢𝐭𝐢𝐳𝐞𝐧 𝐬𝐜𝐢𝐞𝐧𝐜𝐞: 𝐇𝐨𝐰 𝐬𝐞𝐞𝐢𝐧𝐠 𝐢𝐧𝐟𝐥𝐮𝐞𝐧𝐜𝐞𝐬 𝐡𝐞𝐚𝐫𝐢𝐧𝐠 – now out in PLOS One! High-quality annotations of performed music structures are essential for reliable algorithmic analysis of recorded music, with applications ranging from music information retrieval to music therapy. How do seeing and hearing affect citizen science annotation tasks?

Bedoya D, Lascabettes P, Fyfe L, Chew E (2025) Science of music-based citizen science: How seeing influences hearing. PLoS One 20(9): e0325019. https://doi.org/10.1371/journal.pone.0325019

Congratulations all around to the team that made this possible: Daniel Bedoya (first/lead author), Paul Lascabettes, and Lawrence Fyfe.

Science of music-based citizen science: How seeing influences hearing

Abstract: Citizen science engages volunteers to contribute data to scientific projects, often through visual annotation tasks. Hearing-based activities are rare and less well understood. High-quality annotations of performed music structures are essential for reliable algorithmic analysis of recorded music, with applications ranging from music information retrieval to music therapy. Music annotation typically begins with aural input combined with a variety of visual representations, but the impact of these visual and aural inputs on the resulting annotations is not known. Here, we present a study in which participants annotate music segmentation boundaries of variable strengths given only visuals (audio waveform or piano roll), only audio, or both visuals and audio simultaneously. Participants were presented with the 33 contrasting sections (theme and variations) extracted from a through-recorded performance of Beethoven’s 32 Variations in C minor, WoO 80, under differing audiovisual conditions. Their segmentation boundaries were visualized using boundary credence profiles and compared using the unbalanced optimal transport distance, which tracks boundary weights and penalizes boundary removal; these results were then contrasted with the F-measure. With annotations derived from combined audio-visual (cross-modal) input taken as the gold standard for our study, boundary annotations derived from visual-only (unimodal) input were closer to this standard than those derived from audio-only (unimodal) input. The presence of visuals led to larger peaks in boundary credence profiles, marking clearer global segmentations, while audio helped resolve discrepancies and capture subtle segmentation cues. We conclude that audio and visual inputs can be used as cognitive scaffolding to enhance results in large-scale citizen science annotation of music media and to support data analysis and interpretation. In summary, visuals provide cues for large-scale structures, but complex structural nuances are better discerned by ear.
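For readers unfamiliar with the F-measure mentioned above, here is a minimal sketch of a common boundary-detection variant. It is not the paper's evaluation code. An annotated boundary counts as a hit if it lies within a tolerance window of a not-yet-matched reference boundary. The helper name `boundary_f_measure`, the 0.5-second window, and the example boundary times are all hypothetical. Note that this score treats every boundary as equally strong. Boundary strength is exactly what a weight-aware distance such as unbalanced optimal transport can take into account.

```python
def boundary_f_measure(reference, estimated, window=0.5):
    """Boundary hit-rate precision, recall and F-measure (hypothetical helper).

    A boundary in `estimated` is a hit if it lies within +/- `window` seconds
    of an unmatched boundary in `reference`; each reference boundary can be
    matched at most once. Estimates are processed in time order, and each one
    takes the earliest unmatched reference inside its window. Because all
    windows have the same width, this greedy rule yields a maximum matching.
    """
    ref = sorted(reference)
    est = sorted(estimated)
    if not ref or not est:
        return 0.0, 0.0, 0.0
    used = [False] * len(ref)
    hits = 0
    for e in est:
        for i, r in enumerate(ref):
            if not used[i] and abs(r - e) <= window:
                used[i] = True
                hits += 1
                break
    precision = hits / len(est)  # fraction of annotated boundaries that hit
    recall = hits / len(ref)     # fraction of reference boundaries recovered
    if hits == 0:
        return precision, recall, 0.0
    f = 2 * precision * recall / (precision + recall)  # harmonic mean
    return precision, recall, f


if __name__ == "__main__":
    # Hypothetical boundary times in seconds, not data from the study.
    reference = [10.0, 25.0, 40.0]
    estimated = [10.3, 26.0, 39.8, 55.0]
    p, r, f = boundary_f_measure(reference, estimated)
    print(round(p, 3), round(r, 3), round(f, 3))  # prints: 0.5 0.667 0.571
```

Here 10.3 s and 39.8 s fall inside the window and count as hits. The boundary at 26.0 s misses by a full second and scores the same as the spurious one at 55.0 s. A hard-threshold metric like this cannot express "nearly right" or "strong versus weak" boundaries.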

To learn more, see https://doi.org/10.1371/journal.pone.0325019